For structured (bounding-box-based) text extraction, the received image must be aligned with the target (template) image and brought to the same scale; otherwise the boxes will not land on the intended fields. OpenCV provides the feature detection, matching, and warping functions needed to do this.

To align the source and template images, the following steps are required:

- Convert both images to grayscale.

- Detect features (keypoints and their descriptors) in both images.

- Match the feature descriptors of the source and template images. Sort the matches by distance (lower is better) and keep the better half.

- Use each match's query and train indexes to look up the corresponding points in the source and template images.

- Find the homography matrix between the source and template points.

- Use that matrix to apply a perspective transform to the source image so that it lines up with the template image.
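
To make the last two steps more concrete, here is a minimal sketch of what a homography matrix does to a single point. It uses plain Python rather than OpenCV, and the function name `apply_homography` and the matrix `H_example` are illustrative assumptions, not part of the original code.

```python
def apply_homography(H, point):
    """Map a 2D point through a 3x3 homography H (nested lists, row-major)."""
    x, y = point
    # Lift the point to homogeneous coordinates (x, y, 1) and multiply by H.
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    # Divide by w to get back to ordinary image coordinates.
    return (xh / w, yh / w)


# Hypothetical matrix: scale by 2, then shift by (10, 5).
H_example = [[2.0, 0.0, 10.0],
             [0.0, 2.0, 5.0],
             [0.0, 0.0, 1.0]]

print(apply_homography(H_example, (3, 4)))
```

A perspective warp applies this same mapping to every pixel position. The bottom row of the matrix is what allows perspective effects; when it is `[0, 0, 1]`, as in this example, the mapping reduces to an affine transform.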

The algorithms used for each stage are listed below.

- Detecting features: ORB detector. It is fast and free of patent restrictions, unlike SURF (and unlike SIFT until its patent expired in 2020).

- Matching features: a FLANN-based matcher with an LSH index, which suits ORB's binary descriptors.
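
ORB descriptors are binary strings (256 bits by default), so the "distance" of a match is a Hamming distance: the number of bit positions where two descriptors differ. The sketch below shows that computation in plain Python; the helper name `hamming_distance` and the two-byte sample descriptors are made up for illustration, and OpenCV computes this internally.

```python
def hamming_distance(desc_a, desc_b):
    """Count differing bits between two equal-length binary descriptors."""
    if len(desc_a) != len(desc_b):
        raise ValueError("descriptors must have the same length")
    # XOR leaves a 1 bit wherever the two bytes disagree; count those bits.
    return sum(bin(a ^ b).count("1") for a, b in zip(desc_a, desc_b))


# Two toy 2-byte descriptors (real ORB descriptors are 32 bytes long).
d1 = bytes([0b11110000, 0b00000000])
d2 = bytes([0b00001111, 0b00000001])
print(hamming_distance(d1, d2))
```

An LSH index hashes descriptors so that those with a small Hamming distance tend to fall into the same bucket, which avoids comparing every descriptor pair.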

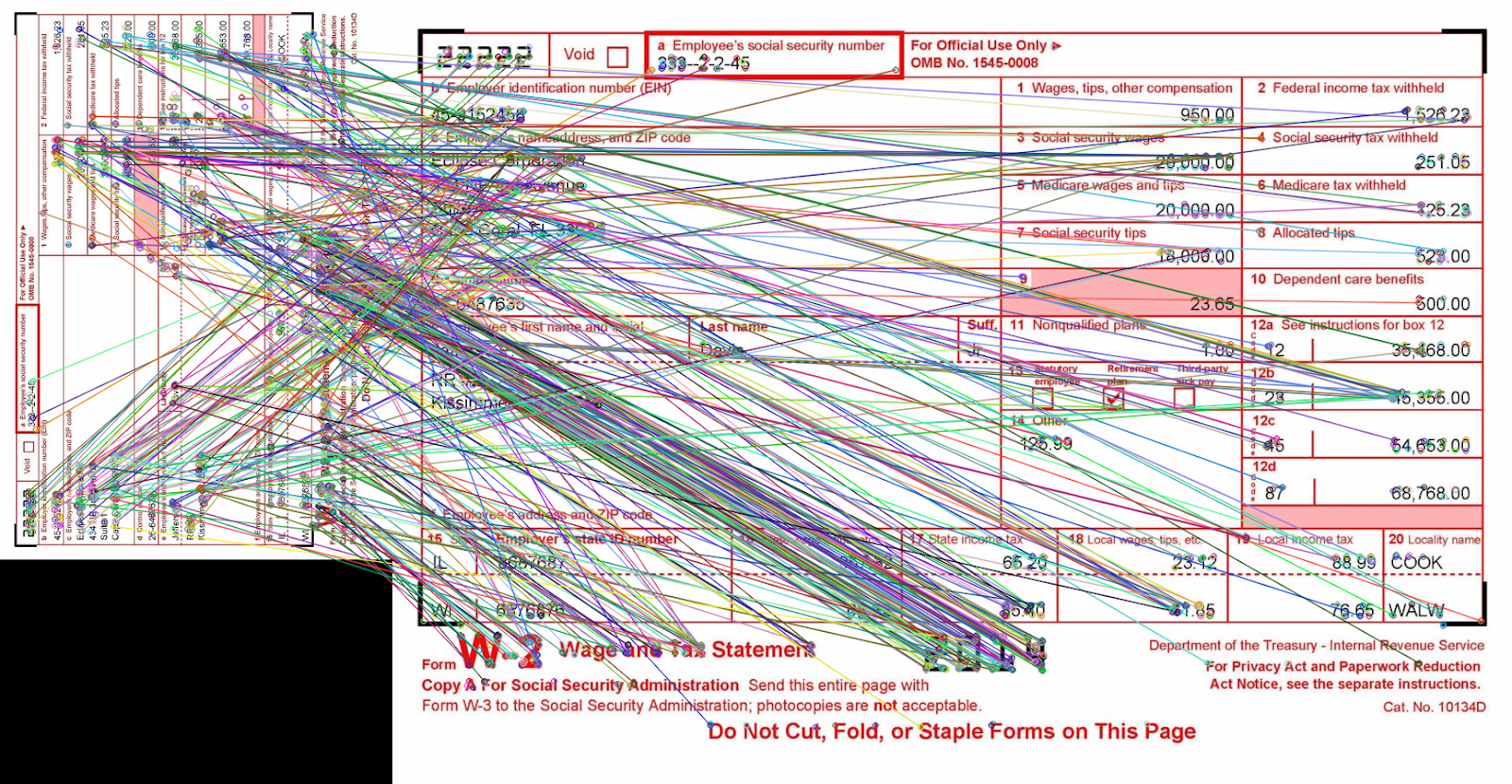

The image above shows the matches found between two W-2 form images of different scale and orientation, drawn with the `drawMatches` function.

The code for aligning an image with its template is given below.

    import cv2
    import numpy as np


    def align_image_with_template(img_to_match_loc,
                                  template_img_loc,
                                  max_features=500):
        img_to_match = cv2.imread(img_to_match_loc)
        template_img = cv2.imread(template_img_loc)

        # Grayscale conversion
        img_to_match_gray = cv2.cvtColor(img_to_match, cv2.COLOR_BGR2GRAY)
        template_img_gray = cv2.cvtColor(template_img, cv2.COLOR_BGR2GRAY)

        # Detect ORB keypoints and compute their binary descriptors
        orb = cv2.ORB_create(nfeatures=max_features)
        kp_to_match, des_to_match = orb.detectAndCompute(img_to_match_gray, None)
        kp_temp, des_temp = orb.detectAndCompute(template_img_gray, None)

        # FLANN-based feature matching with an LSH index
        FLANN_INDEX_LSH = 6
        index_params = dict(
            algorithm=FLANN_INDEX_LSH,
            table_number=8,
            key_size=12,
            multi_probe_level=1)
        search_params = dict(checks=60)
        flann = cv2.FlannBasedMatcher(index_params, search_params)
        matches = flann.match(des_to_match, des_temp)

        # Keep the better half of the matches (lower distance is better)
        matches = sorted(matches, key=lambda x: x.distance)
        matches = matches[:len(matches) // 2]

        # Save a visualization of the retained matches
        img_matches = cv2.drawMatches(img_to_match, kp_to_match, template_img,
                                      kp_temp, matches, None)
        cv2.imwrite("data/matches.png", img_matches)

        # Matched point coordinates in the source and template images
        src_pts = np.float32(
            [kp_to_match[m.queryIdx].pt for m in matches]).reshape(-1, 1, 2)
        dst_pts = np.float32(
            [kp_temp[m.trainIdx].pt for m in matches]).reshape(-1, 1, 2)

        # Estimate the homography with RANSAC (5.0 px reprojection threshold)
        M, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)

        # Warp the source image into the template's frame
        height, width = template_img.shape[:2]
        img_aligned = cv2.warpPerspective(img_to_match, M, (width, height))
        return img_aligned
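
One fragile spot in the function above is the filtering step: a homography needs at least four point correspondences, and keeping only half of a small match list can drop below that. Here is a hedged sketch of that step in isolation, with a guard added. The names `Match`, `keep_best_matches`, and `MIN_MATCHES_FOR_HOMOGRAPHY` are hypothetical; `Match` is only a stand-in for `cv2.DMatch` so the example runs without OpenCV.

```python
from collections import namedtuple

# Stand-in for cv2.DMatch with the three fields the alignment code uses.
Match = namedtuple("Match", ["queryIdx", "trainIdx", "distance"])

# A homography cannot be estimated from fewer than four point pairs.
MIN_MATCHES_FOR_HOMOGRAPHY = 4


def keep_best_matches(matches, keep_fraction=0.5):
    """Sort matches by distance and keep the best fraction of them."""
    ranked = sorted(matches, key=lambda m: m.distance)
    kept = ranked[:int(len(ranked) * keep_fraction)]
    if len(kept) < MIN_MATCHES_FOR_HOMOGRAPHY:
        raise ValueError(
            f"only {len(kept)} matches kept; "
            f"need at least {MIN_MATCHES_FOR_HOMOGRAPHY}")
    return kept


# Toy data: eight matches with arbitrary distances.
sample = [Match(i, i, d) for i, d in enumerate([5, 1, 7, 3, 8, 2, 6, 4])]
best = keep_best_matches(sample)
print([m.distance for m in best])
```

Raising early gives a clearer error than letting the homography estimation fail on too few points. Whether to raise or fall back to the unaligned image is a design choice that depends on the surrounding pipeline.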
